@cyberzork said: eM Client I suspect would have to be special modified to support all menus using voice control… So would all depends on […market size…]

That is why I wrote the long explanation in https://forum.emclient.com/t/emclient-support-for-accessibility-via-speech-recognition-navigation-of-menus-etc,

Frankly, I don’t want any special modifications to support Microsoft SAPI (Speech API). That’s a lot of work, and it’s nowhere near as good as you might think it would be. IMHO the most speech-friendly application that I use is good old Emacs, which is totally non-speech-aware: it wins because it has a command language, not because it exposes the menus. Navigating menus by voice is tiresome and inefficient.

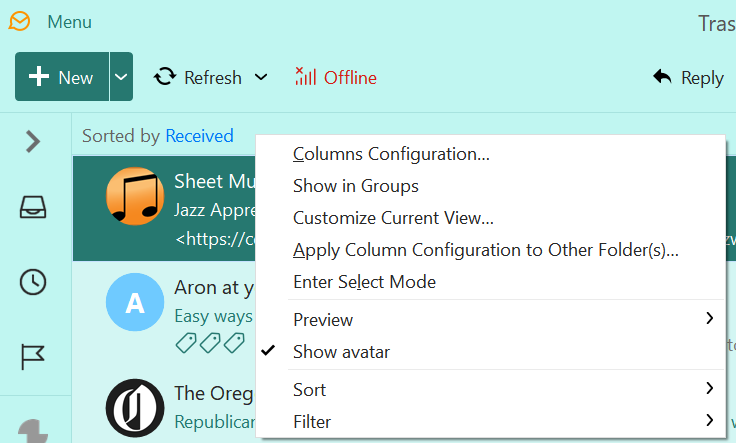

All I want is keyboard navigation of the GUI, the menus and buttons. This is what I call “consistent keyboard only” usage of the GUI. It is much more reliable to automate by having the speech control program emit keyboard events. The speech control program can emit mouse events, but moving the mouse around by voice is even more tiresome than navigating menus by voice.

A consistent keyboard-only UI just makes sure that there is a way to move the focus to any GUI item by keyboard commands. Possibly just a single keyboard command, like BackTab, to go from one GUI element to another. Ideally there is also a way to move directly to one or more known, fixed places, so that other GUI elements can be reached with key sequences that do not change.
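To make that concrete, here is a minimal sketch of the idea as a toy model. It is not eM Client’s code: the widget names and the `Ctrl+1` / `Ctrl+E` anchor hotkeys are made up for illustration. Given a fixed Tab order and a few anchor hotkeys, every widget gets a key sequence that works no matter where the focus currently is, which is exactly what a speech control program needs.

```python
from dataclasses import dataclass, field


@dataclass
class FocusRing:
    """Toy model of one window's keyboard focus order (all names hypothetical)."""
    order: list                                   # widgets in Tab order
    anchors: dict = field(default_factory=dict)   # hotkey -> widget it jumps to

    def keys_to(self, target):
        """Return the shortest anchor-based key sequence that reaches `target`.

        The sequence always starts with an anchor hotkey, so it does not
        depend on which widget currently has the focus.
        """
        if target not in self.order:
            raise KeyError(f"{target!r} is not in the focus order")
        if not self.anchors:
            raise ValueError("no anchor hotkeys defined")
        n = len(self.order)
        t = self.order.index(target)
        best = None
        for hotkey, widget in self.anchors.items():
            a = self.order.index(widget)
            forward = (t - a) % n     # number of Tab presses from the anchor
            backward = (a - t) % n    # number of Shift+Tab presses instead
            if forward <= backward:
                seq = [hotkey] + ["Tab"] * forward
            else:
                seq = [hotkey] + ["Shift+Tab"] * backward
            if best is None or len(seq) < len(best):
                best = seq
        return best


# Hypothetical mail window: five widgets, two fixed anchor hotkeys.
ring = FocusRing(
    order=["folder_tree", "message_list", "search_box",
           "reply_button", "delete_button"],
    anchors={"Ctrl+1": "folder_tree", "Ctrl+E": "search_box"},
)

print(ring.keys_to("reply_button"))   # ['Ctrl+E', 'Tab']
print(ring.keys_to("message_list"))   # ['Ctrl+1', 'Tab']
```

A voice macro such as “reply” can then be bound to the sequence `Ctrl+E`, `Tab`, `Enter` and keep working regardless of window size or where the mouse pointer happens to be.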

It’s not that hard.

It also has a big advantage: it makes it a lot easier to automate software testing. Sure, you probably have a test setup that will move a mouse pointer around and send events. (If you don’t, then a few years ago I could have sold you one.) But unless your test setup is scraping the screen pixels to see where it should move the mouse pointer to… Well, far too many companies don’t really test this except on a few configurations of displays and window sizes, because minor changes in rendering or scaling can break your test. Consistent keyboard interfaces don’t fix the mouse part, but they give you a way of completely testing your GUI via the keyboard interface. And that makes it easier to test the mouse pointer stuff that depends on display and window size, because you don’t need to use it for the basic functionality test. (If y’all know about Agile Programming, Kent Beck says that he came up with the unit testing approach as a way of avoiding as many problems as possible related to mouse-driven GUI testing.)
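As a rough sketch of what such a keyboard-only test could check, assume the test harness can record, for each focused widget, which widget each key press moves the focus to. The `transitions` table and widget names below are hypothetical, not taken from any real product. The check is then a plain graph search: any widget that cannot be reached by key presses alone is a keyboard accessibility bug, and it is found without looking at a single pixel.

```python
from collections import deque


def reachable_by_keyboard(transitions, start):
    """Breadth-first search over 'key press moves focus' edges.

    transitions: {focused_widget: {key: widget_focused_after_key}}
    Returns the set of widgets reachable from `start` using keys only.
    """
    seen = {start}
    queue = deque([start])
    while queue:
        widget = queue.popleft()
        for next_widget in transitions.get(widget, {}).values():
            if next_widget not in seen:
                seen.add(next_widget)
                queue.append(next_widget)
    return seen


def keyboard_orphans(all_widgets, transitions, start):
    """Widgets that exist in the GUI but can only be reached with the mouse."""
    return set(all_widgets) - reachable_by_keyboard(transitions, start)


# Hypothetical window in which 'attach_button' was left out of the Tab order.
all_widgets = {"folder_tree", "message_list", "reply_button", "attach_button"}
transitions = {
    "folder_tree": {"Tab": "message_list"},
    "message_list": {"Tab": "reply_button", "Shift+Tab": "folder_tree"},
    "reply_button": {"Tab": "folder_tree", "Shift+Tab": "message_list"},
}

print(keyboard_orphans(all_widgets, transitions, "folder_tree"))
# {'attach_button'}
```

Because the check does not depend on screen coordinates, the same test should pass or fail identically on every display and window size.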

As for the market: it is not just the number of people who use speech recognition. That small market is multiplied by ADA (Americans with Disabilities Act) requirements that computer systems used by the federal government, and by organizations that it funds, must be accessible. Most private companies try to follow ADA requirements so that they are allowed to receive US federal government funding. For organizations and companies trying to do this, if they are considering purchasing an email program, and if there are two or more email programs on the market, one of which is accessible and the others are not, then they can purchase just the accessible software, or they can purchase the less accessible software for general use but also have to purchase a few copies of the accessible software for the employees who need it. Which do you think an IT department prefers: having to maintain two different pieces of software, or one-stop shopping? Risking ADA lawsuits?

Anyway, I don’t like the ADA lawsuit trolls that are becoming more and more common.

I’m just trying to teach people that it is really pretty easy to create a consistent keyboard GUI. You already do hotkeys and menu accelerators; you just need to do them a bit more carefully.

Beware of accessibility consultants who tell you how much work and $$$ you will need to invest in accessibility. I don’t know if it is because they have a self-interest in increasing the dollars spent, or because so many of them aren’t really programmers or UI designers, but many of them exaggerate the difficulty.